
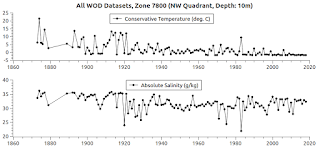

Fig. 1

"The ways scientists study the oceans become more and more sophisticated over time. To keep pace with their curiosity, oceanographers must often invent devices capable of helping them observe very specific phenomena. Here are the top five new instruments in the Scripps arsenal that are transforming the way we see the Blue Planet.(Five New Instruments Keeping Oceanography Fun, emphasis added). The early "instruments" (e.g. a bucket) seem utterly crude now, but they were once the only game in town:

...

A network of more than 3,500 floats distributed more or less evenly throughout the world’s oceans is in the midst of transforming how we understand them. Called Argo, the network’s existence was enabled in the late 1980s by floats developed by Scripps scientists known by the acronym SOLO. Now SOLOs and similar types of Argo floats record the “vital signs” of the oceans: temperature, salinity, and current speed and direction. Collectively they are enabling oceanographers to observe oceans at global scales and will over time provide complete records of cycles that occur over decades."

"Water sampling devices range from a bucket dropped over the side of a ship to large water bottles sent thousands of meters toward the seafloor on a wire. Probably the most commonly used water sampler is known as a CTD/rosette: it is a framework designed to carry 12 to 36 sampling bottles(Seagoing Tools of Oceanography, emphasis added). That reality does not apply only to the means of gathering samples, it also applies to the way the samples are analyzed after being gathered:(typically ranging from 1.2- to 30-liter capacity) and a conductivity/ temperature/ depth sensor that sends information to the laboratory so that the water bottles can be closed selectively as the instrument ascends. A standard rosette/CTD cast, depending on water depth, requires two to five hours of station time. New methods for this kind of sampling are being developed in order to reduce station time. The largest water bottles, called Gerard barrels, collect 250 liters. Particles in the water samples may be quantified with a transmissometer sent down the wire or attached to a CTD/rosette. Aboard the ship, a flow cytometer may be used to analyze particles in the form of single-celled organisms for optical properties indicative of their physiology and structure."

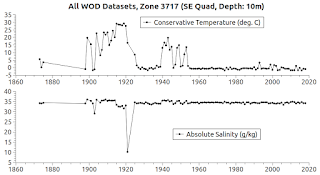

Fig. 2 circa 1870 - 1929

"As one of few who have been involved in the equation of state of seawater over the last 40 years, I was invited to review some of the history behind its early development and also the more recent thermodynamic equation of state. The article first reviews early (late 1800s) work by Knudsen and others in(History of the Equation of State of Seawater, emphasis added). We tend to think of salinity as a measurement, such as "34.503," rather than thinking of salinity as "a concept."defining the concept of salinity. This summary leads into the development of the practical salinity scale. Our studies at the University of Miami Rosenstiel School, along with the work of Alain Poisson’s group at Laboratoire de Physique et Chimie, Université Pierre et Marie Curie, and that of Alvin Bradshaw and Karl Schleicher at Woods Hole Oceanographic Institution, were instrumental in deriving the 1980 equation of state (EOS-80) that has been used for 30 years. The fundamental work of Ranier Feistel at Leibniz Institute for Baltic Sea Research led to the development of a Gibbs free energy function that is the backbone of the new thermodynamic equation of state (TEOS-10). It can be used to determine all of the thermodynamic properties of seawater."

Fig. 3 1930 - 1959

Fig. 4 1960 - 2018

In the most modern sense it is a concept of calculation now called "Absolute Salinity."

That term applies to the equation of state formulas encapsulated in TEOS-10, which replaced EOS-80, as the paper quoted above points out.
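To make the "concept of calculation" idea concrete, here is a minimal Python sketch of how a salinity number is computed rather than read off directly. It uses the 1978 Practical Salinity Scale (PSS-78) polynomial, assuming the conductivity ratio R_t (sample versus standard KCl solution at the same temperature and atmospheric pressure, as on a bench salinometer) is already in hand; converting in-situ CTD conductivity would also need pressure and temperature correction terms that are omitted here. It then applies the TEOS-10 scale factor 35.16504/35 g/kg to get Reference Salinity. The function names are illustrative only and do not come from any library.

```python
import math

# PSS-78 coefficients (UNESCO 1981). The a-terms sum to 35 and the b-terms
# sum to 0, so a conductivity ratio of exactly 1 yields S_P = 35 at any t.
A = (0.0080, -0.1692, 25.3851, 14.0941, -7.0261, 2.7081)
B = (0.0005, -0.0056, -0.0066, -0.0375, 0.0636, -0.0144)
K = 0.0162

# TEOS-10 conversion factor u_PS from Practical Salinity to g/kg.
U_PS = 35.16504 / 35.0


def practical_salinity(rt: float, t68: float) -> float:
    """Practical Salinity S_P (dimensionless) from conductivity ratio R_t.

    Sketch assumptions: R_t is the ratio of the sample's conductivity to that
    of the standard KCl solution at the same temperature t68 (deg C on the
    IPTS-68 scale; multiply an ITS-90 temperature by 1.00024 first) and at
    atmospheric pressure. The scale is intended for roughly 2 <= S_P <= 42.
    In-situ CTD data would also need the R_p and r_t corrections omitted here.
    """
    root = math.sqrt(rt)
    # Polynomials in powers of sqrt(R_t): exponents 0, 0.5, 1, 1.5, 2, 2.5.
    base = sum(a * root**i for i, a in enumerate(A))
    dt = t68 - 15.0
    delta = dt / (1.0 + K * dt) * sum(b * root**i for i, b in enumerate(B))
    return base + delta


def reference_salinity(sp: float) -> float:
    """TEOS-10 Reference Salinity S_R in g/kg from Practical Salinity."""
    return U_PS * sp


if __name__ == "__main__":
    sp = practical_salinity(1.0, 20.0)
    print(f"S_P = {sp:.4f}, S_R = {reference_salinity(sp):.4f} g/kg")
```

For instance, the practical salinity 34.503 mentioned earlier corresponds to a Reference Salinity of about 34.67 g/kg. Absolute Salinity takes one more step, S_A = S_R + δS_A, where the anomaly δS_A depends on location and comes from TEOS-10's lookup data; for real work, the GSW Oceanographic Toolbox (for example, the Python `gsw` package's `SA_from_SP`) is the better choice than a hand-rolled sketch like this one.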

If you really want to immerse your curiosity within this subject, here are some more sources of information (History of Oceanography 1, History of Oceanography 2, Ocean Exploration 1801-1900).

Meanwhile, I must move on to today's graphs.

They show some of the "footprints" of the history of measuring; it could be said that they contain that history in graph format.

Let's begin with the graph at Fig. 1, which shows a span of time from circa 1870 to the present.

That graph shows major measurement undulations in the late 1800's and early 1900's.

The other graphs (Fig. 2 - Fig. 4) are sections of the main Fig. 1 graph.

The graph at Fig. 2 is a section of the main graph, focusing on the time frame of the late 1800's and early 1900's.

The graph at Fig. 3 is another section of the main graph, focusing on the 1930 - 1959 time frame.

Finally, the graph at Fig. 4 focuses on the span of time from 1960 - 2018.

Notice how the measurement undulations tend to subside with time.

Are the undulations in Fig. 2, which subside substantially by the time shown in Fig. 4, the result of meager early instruments (which later developed into robust ones), of the smaller number of measurements taken back then, or both?

In the main, it is probably the latter ("both").
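The "both" answer can be illustrated with a toy simulation. This is a minimal sketch with purely hypothetical numbers, not a model of the data in the figures: each simulated year averages some number of readings of a true value that never changes, and each reading carries independent random error sigma (a stand-in for instrument quality). The year-to-year spread of those averages should shrink roughly as sigma/sqrt(n), so it falls when instruments improve, falls when more measurements are taken, and falls most when both happen.

```python
import random
import statistics


def annual_mean_spread(n_per_year: int, sigma: float,
                       years: int = 200, seed: int = 0) -> float:
    """Year-to-year standard deviation of annual means (hypothetical model).

    Each simulated year averages n_per_year readings of a true value that
    never changes; every reading carries independent Gaussian error sigma.
    Any spread in the annual means is therefore pure measurement noise.
    """
    rng = random.Random(seed)
    true_value = 0.0  # hypothetical: nothing real is changing
    annual_means = []
    for _ in range(years):
        readings = [rng.gauss(true_value, sigma) for _ in range(n_per_year)]
        annual_means.append(statistics.fmean(readings))
    return statistics.stdev(annual_means)


if __name__ == "__main__":
    # Hypothetical scenarios: sparse and crude, plentiful but crude,
    # plentiful and robust.
    scenarios = [("few readings, crude instruments", 5, 2.0),
                 ("many readings, crude instruments", 500, 2.0),
                 ("many readings, robust instruments", 500, 0.5)]
    for label, n, sigma in scenarios:
        spread = annual_mean_spread(n, sigma)
        print(f"{label}: spread {spread:.3f} (sigma/sqrt(n) = {sigma / n**0.5:.3f})")
```

The simulated spreads should roughly track the sigma/sqrt(n) column; real records also have systematic instrument biases and uneven geographic coverage, which this sketch ignores.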

The next post in this series is here; the previous post in this series is here.